As artificial intelligence transforms industries, businesses face growing pressure to deploy solutions that are both innovative and secure. Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) systems have emerged as powerful tools for complex tasks such as contextual information retrieval, automated customer support, and content generation. However, the infrastructure requirements for these technologies are immense. They demand not only substantial computational power but also stringent data security and the ability to scale. Private cloud is well suited to these needs, offering a balance of security, performance, and flexibility.

Secure LLM and RAG Implementations

LLMs and RAG workflows often require access to proprietary or sensitive data, whether for training and fine-tuning models or, in RAG pipelines, for retrieving context at query time. Unlike public cloud solutions, private cloud keeps these datasets entirely within an organization’s control. This matters not only for mitigating risk but also for complying with regulations such as GDPR or HIPAA. With private cloud, organizations can enforce rigorous access control policies, require multi-factor authentication, and encrypt traffic, protecting their AI workflows against breaches and unauthorized access.

At the same time, private cloud infrastructure is well equipped to handle the high computational demands of LLMs and RAG pipelines. By combining powerful GPUs with low-latency connections and tailored configurations, private cloud delivers the performance needed to process large datasets and run complex AI models efficiently. This helps avoid bottlenecks, so that both training and real-time inference continue to run smoothly as workloads grow.

Accelerating AI Projects with Jupyter Notebooks

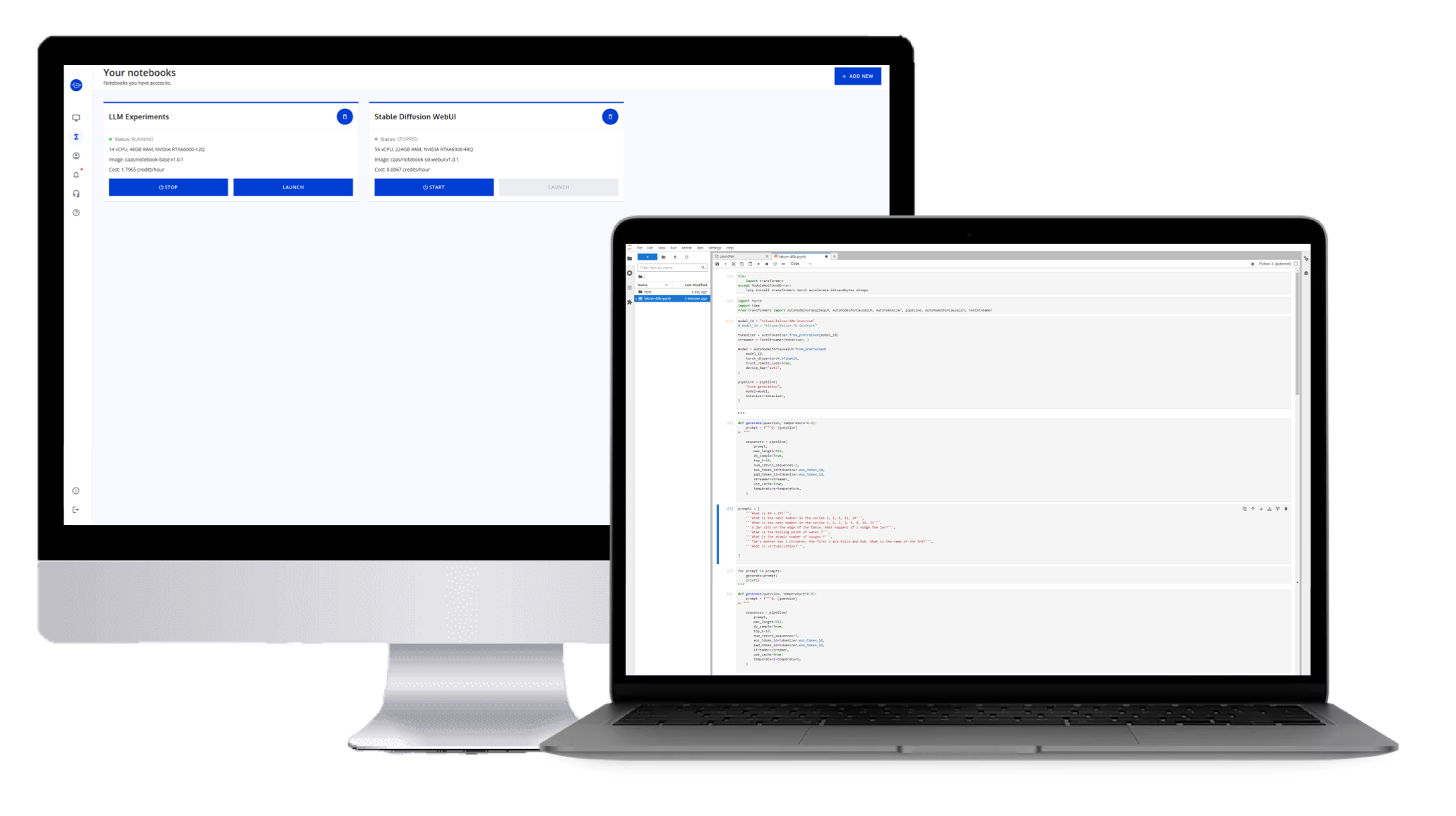

One of the most challenging aspects of AI development is enabling collaboration across teams while maintaining security. JupyterLab, a widely adopted environment for data analysis and machine learning, is well suited to experimentation and iterative development, giving data scientists an interactive, flexible space to refine their models and test ideas. Cloudalize builds on this experience with our GPU-Powered Notebooks, which run on JupyterLab and add GPU acceleration for faster processing and easier scaling of complex AI tasks.

Cloudalize’s solution provides on-demand access to NVIDIA GPU instances through a simple browser-based interface. Because the environment comes pre-configured, data scientists can start working immediately instead of spending time on setup, and can focus entirely on refining their LLMs or RAG systems.

The platform also comes pre-loaded with popular Gradio-powered web UIs, including AUTOMATIC1111’s Stable Diffusion web UI for AI-based image generation and Oobabooga’s text-generation-webui for running Large Language Models. These ready-to-use integrations make it easy to test workflows and deploy solutions quickly, shortening the path from idea to working AI application.

Performance, Scalability, and Cost Transparency

Deploying AI workloads in a private cloud offers another critical advantage: predictable, scalable infrastructure. Unlike public cloud environments, where costs can escalate because of data egress fees or fluctuating usage-based pricing, private cloud offers transparent, predictable costs. Organizations can plan their budgets with confidence while scaling GPU resources up or down as needed.

Cloudalize’s private cloud is designed for flexibility, allowing businesses to accommodate growth without downtime. Whether that means adding GPU capacity to train larger LLMs or adjusting compute power to support expanded RAG deployments, the platform adapts to changing requirements.

Conclusion

As LLMs become more advanced and RAG systems grow increasingly sophisticated, the demands on infrastructure will continue to rise. Private cloud provides a long-term solution, giving businesses the security, performance, and control needed to meet these evolving challenges. Cloudalize’s GPU Cloud Notebooks add a further layer of usability, empowering teams to prototype, refine, and deploy AI solutions in a collaborative, high-performance environment.

By leveraging private cloud, organizations can confidently adopt transformative technologies like LLMs and RAG, knowing their data is secure, their resources are scalable, and their teams are equipped with the tools they need to innovate.